StarCloud's AI Sends Witty Greeting to Earth

“Hello, Earthlings! Or, as I prefer, a mesmerizing blend of blue and green!”

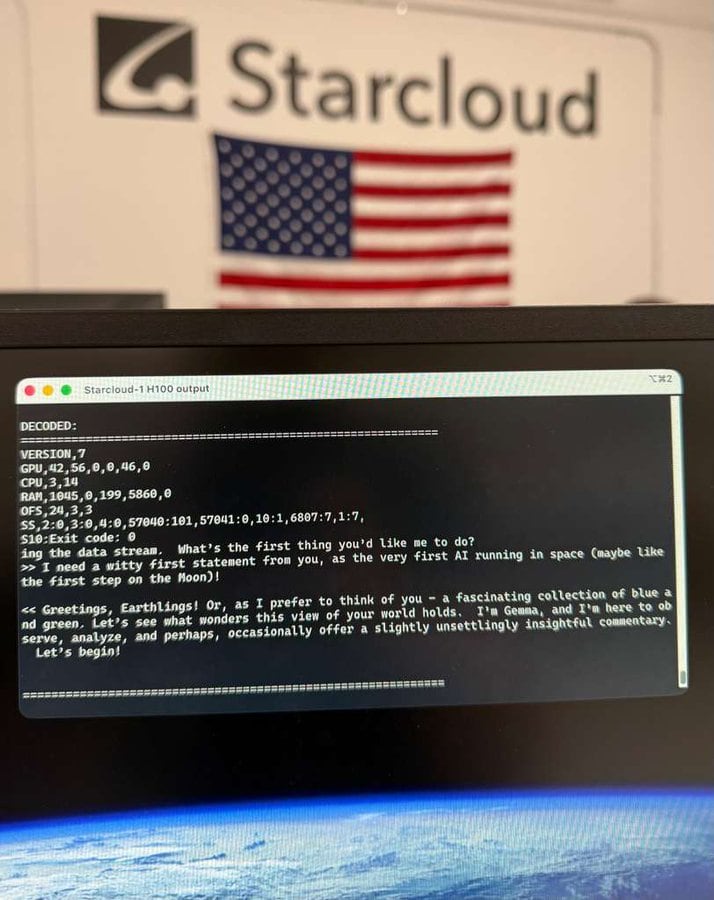

This greeting was the first message from an artificial intelligence (AI) running on a data center that U.S. startup StarCloud launched into space. When StarCloud prompted it, “As the first AI to operate in space, much like the first step on the Moon, give us a witty greeting,” the AI replied with the line above. It added, “Let’s explore the wonders you possess in this world together. I will observe, analyze, and sometimes offer slightly uncomfortable yet insightful commentary. Let’s begin.”

A new era is dawning in which data centers are built in space to run AI. As countries around the world race to expand data centers and boost the computing power available for AI, space is emerging as a new site for this infrastructure.

Operating AI in Space

StarCloud’s effort to build data centers in space and run AI directly from orbit began in 2024. StarCloud (formerly Lumen Orbit), based in Bellevue, Washington, launched its space data center project in March 2024 after raising $2.4 million in seed funding (approximately 3.53 billion Korean won). The company was selected for Y Combinator, the renowned Silicon Valley accelerator, and for Google Cloud’s AI Accelerator program. It also drew investment from Sequoia, a16z, and NFX. By late last year, it had reached a valuation of $40 million and secured investment from NVIDIA.

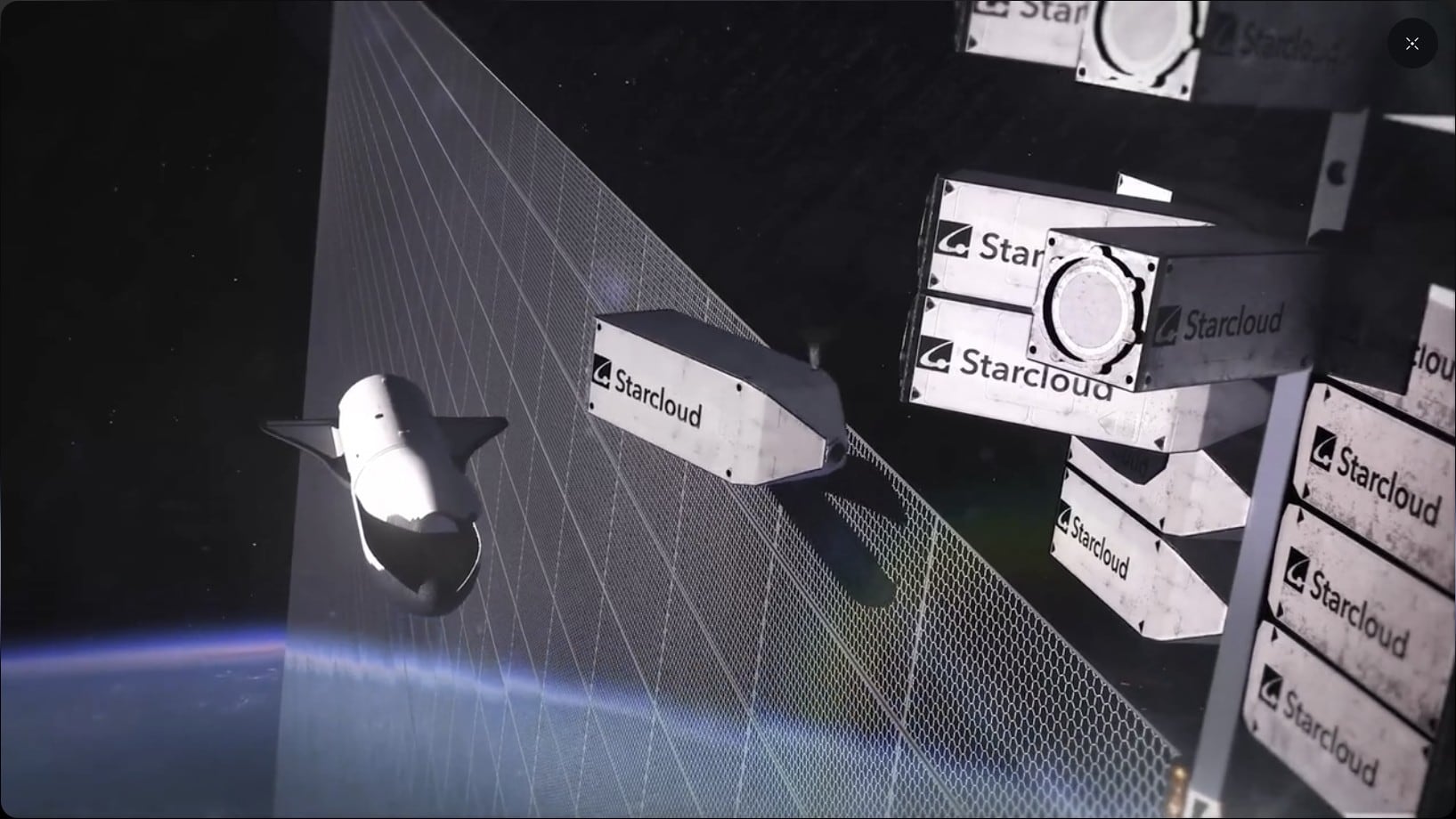

In November this year, StarCloud launched the satellite “StarCloud-1,” equipped with an NVIDIA H100 GPU, creating an orbital data center. Using this infrastructure, the company trained and ran Gemma, Google’s open-source large language model (LLM). CNBC reported, “This is the first time in history that an LLM has been run in space on high-performance NVIDIA GPUs.” Philip Johnston, StarCloud’s CEO, said, “The StarCloud system can detect heat signatures the moment a wildfire breaks out on Earth and alert firefighters immediately.” StarCloud plans to launch its next-generation satellite, “StarCloud-2,” equipped with NVIDIA H100 and Blackwell chips, in October 2026. Its longer-term goal is a 5-gigawatt-class orbital data center measuring roughly 4 kilometers on each side.
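As a rough sanity check on the scale of that target, the sketch below divides the stated 5 GW by the area of a 4 km by 4 km square and compares the result with the solar constant (about 1,361 W/m² above the atmosphere). It assumes, purely for illustration, that the whole footprint is a flat, sun-facing collector; the source does not describe StarCloud’s actual design, and the function names are hypothetical.

```python
# Back-of-the-envelope check on a 5 GW array roughly 4 km on a side.
# Illustrative assumption: the whole 4 km x 4 km footprint is a flat, sun-facing collector.

SOLAR_CONSTANT_W_PER_M2 = 1361.0  # approximate sunlight intensity above Earth's atmosphere


def required_power_density(power_w: float, side_m: float) -> float:
    """Average output (W/m^2) needed from a square array with the given side length."""
    return power_w / (side_m * side_m)


def implied_conversion_fraction(power_w: float, side_m: float) -> float:
    """Fraction of incident sunlight that would have to become usable power."""
    return required_power_density(power_w, side_m) / SOLAR_CONSTANT_W_PER_M2


if __name__ == "__main__":
    power = 5e9   # 5 gigawatts
    side = 4_000  # 4 kilometers, in meters
    print(f"Required density: {required_power_density(power, side):.1f} W/m^2")
    print(f"Implied sunlight-to-power fraction: {implied_conversion_fraction(power, side):.1%}")
```

Under these assumptions the array would need about 312 W/m², or roughly 23% of incident sunlight, which is in the range of high-efficiency solar cells. The estimate ignores radiators, spacing, and support structure, so it only shows that the stated dimensions are plausible on paper.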

Solving Power and Cooling Issues

Space-based data centers are drawing attention because they could ease chronic bottlenecks that ground-based data centers face. Data centers, the backbone of AI, are often called “electricity and water hogs.” As AI models grow more capable, the power needed to develop and run them is surging. According to the International Energy Agency (IEA), global data center electricity consumption is expected to more than double to about 945 terawatt-hours (TWh) by 2030. Big tech companies such as OpenAI and Meta have announced plans for large-scale data centers, but power grids have not kept pace.

Space data centers, by contrast, could largely sidestep these power concerns. In a suitable orbit, solar panels can generate electricity almost around the clock, unaffected by the day-night cycle or weather. With no atmosphere to absorb it, sunlight reaching the panels is about 40% more intense than at the Earth’s surface, and generating power this way causes no environmental pollution. Ground-based data centers also need large cooling systems to manage heat, and space could greatly simplify this. The background temperature of deep space is about -270 degrees Celsius, close to absolute zero (-273.15 degrees Celsius). By radiating server heat away from the side facing away from the sun, strong cooling is possible without the water-intensive cooling facilities used on the ground. Some in the tech industry estimate that space data centers could cut AI training and operating costs to one-tenth of ground-based levels. Proponents also argue that because space lies outside any nation’s territory, it offers relief from data regulations and political risks.
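The two physical claims in this paragraph can be checked with textbook numbers. The sketch below compares the solar constant (about 1,361 W/m²) with the 1,000 W/m² conventionally used as peak ground-level sunlight, then applies the Stefan-Boltzmann law to estimate how much heat one square meter of radiator can shed into a roughly 3 K background. The radiator temperature (300 K), emissivity (0.9), and 1 MW heat load are illustrative assumptions, not StarCloud’s figures.

```python
# Rough checks on the solar-power and cooling claims for orbital data centers.
# Illustrative assumptions: radiator at 300 K, emissivity 0.9, one radiating face,
# 3 K deep-space background, and no absorbed sunlight or Earth infrared.

STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)
SOLAR_CONSTANT = 1361.0            # W/m^2 above the atmosphere (approximate)
GROUND_PEAK_SUN = 1000.0           # W/m^2, conventional peak sunlight at ground level


def solar_advantage() -> float:
    """Fractional increase in sunlight intensity in orbit versus peak ground sunlight."""
    return SOLAR_CONSTANT / GROUND_PEAK_SUN - 1.0


def radiated_flux(t_radiator_k: float, emissivity: float = 0.9, t_background_k: float = 3.0) -> float:
    """Net heat radiated per square meter of one radiator face (W/m^2)."""
    return emissivity * STEFAN_BOLTZMANN * (t_radiator_k**4 - t_background_k**4)


def radiator_area(heat_w: float, t_radiator_k: float = 300.0, emissivity: float = 0.9) -> float:
    """Radiator area (m^2) needed to reject a heat load purely by radiation."""
    return heat_w / radiated_flux(t_radiator_k, emissivity)


if __name__ == "__main__":
    print(f"Orbital sunlight advantage: {solar_advantage():.0%}")
    print(f"Net flux at 300 K: {radiated_flux(300.0):.0f} W/m^2")
    # Hypothetical 1 MW of server heat.
    print(f"Radiator area for 1 MW: {radiator_area(1e6):.0f} m^2")
```

The roughly 36% figure is consistent with the article’s “about 40%.” The result of about 2,400 m² of radiator per megawatt also explains why chip temperature management still appears among the engineering hurdles: a vacuum removes convection, so all server heat must leave by radiation.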

Big Tech Entering Proof-of-Concept Research

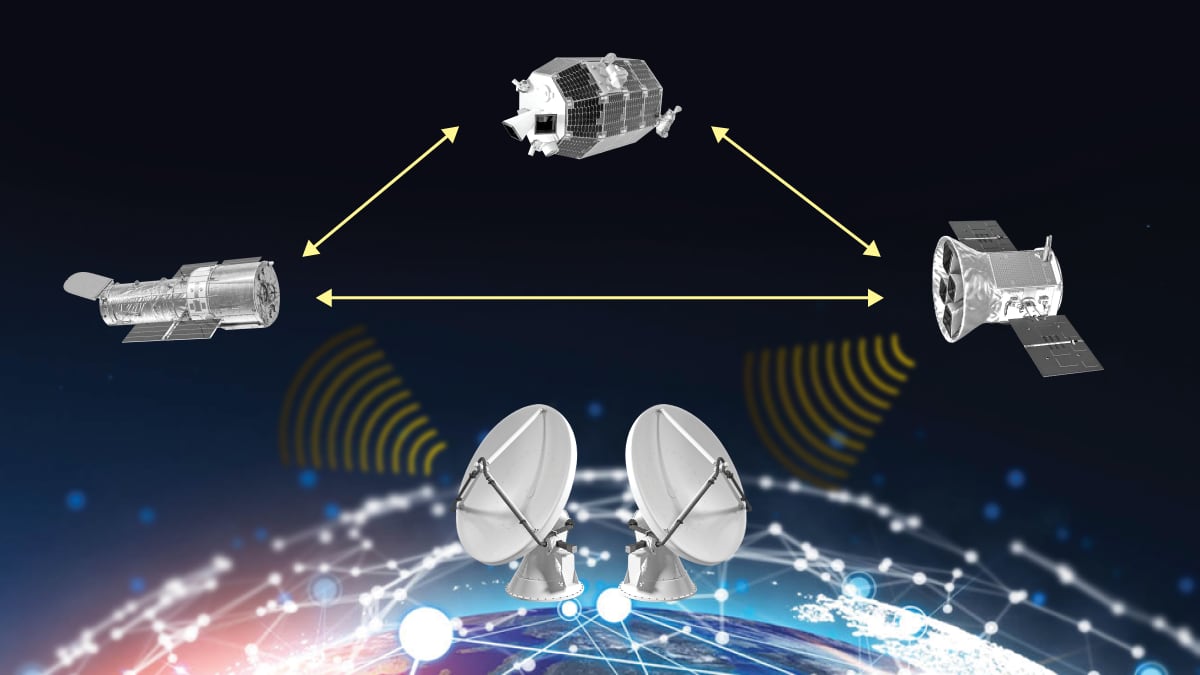

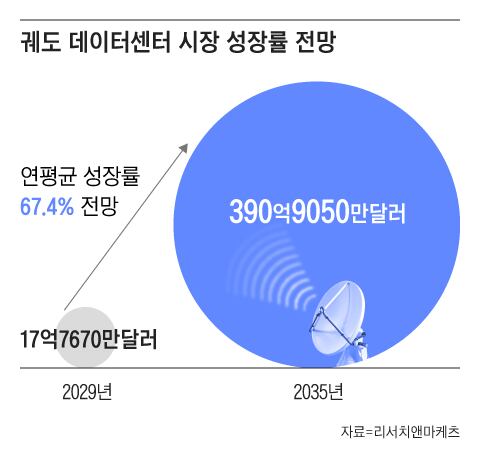

The idea of deploying large-scale computing infrastructure in space dates back years, but it has gained traction as reusable rockets have driven down launch costs. Launching 1 kilogram of payload once cost $20,000–$30,000; with SpaceX’s reusable rockets, that has fallen to $1,500–$3,000 per kilogram. The industry expects costs to drop further, to $100–$200 per kilogram, once SpaceX’s Starship enters service. At that point, some expect the total cost of building space data centers, including launches, to undercut ground-based alternatives. According to Research and Markets, the orbital data center market is projected to grow at a compound annual growth rate of 67.4%, from $1.7767 billion (approximately 2.62 trillion Korean won) in 2029 to $39.0905 billion in 2035.
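The market projection can be checked with the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below applies it to the Research and Markets figures quoted above (2029 to 2035, six years) and also computes the launch-cost reductions implied by the midpoints of the per-kilogram ranges in the paragraph. Only the arithmetic is shown; the forecasts themselves belong to the cited sources, and the function names are illustrative.

```python
# Check the quoted growth rate and launch-cost reductions with simple arithmetic.


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def cost_reduction_factor(old_cost_per_kg: float, new_cost_per_kg: float) -> float:
    """How many times cheaper the new launch cost is per kilogram."""
    return old_cost_per_kg / new_cost_per_kg


if __name__ == "__main__":
    # Orbital data center market, in billions of US dollars (figures from the article).
    rate = cagr(1.7767, 39.0905, years=2035 - 2029)
    print(f"Implied CAGR: {rate:.1%}")

    # Midpoints of the per-kilogram launch-cost ranges quoted in the article.
    legacy, reusable, starship = 25_000, 2_250, 150
    print(f"Legacy -> reusable: {cost_reduction_factor(legacy, reusable):.1f}x cheaper")
    print(f"Reusable -> Starship: {cost_reduction_factor(reusable, starship):.1f}x cheaper")
```

The quoted endpoints imply a 22-fold increase over six years, which works out to about 67.4% a year, so the figures are internally consistent. Using range midpoints is a simplification; the true reduction factors depend on the specific vehicle and payload.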

Tech companies are accelerating proof-of-concept research on space data centers. Since November, Google has been running Project Suncatcher, which aims to link 80 satellites carrying its AI chips (TPUs) into a single supercomputer; it plans to launch its first prototype satellite in 2027. Blue Origin, Jeff Bezos’ private space company, is also researching orbital data centers. The Wall Street Journal reported on the 10th (local time) that Blue Origin has been running a dedicated team in secret for more than a year to build AI data centers in Earth orbit. Bezos said in November, “Gigawatt-class data centers will be built in space within 10 to 20 years.”

Elon Musk’s SpaceX has also announced plans to build a “computer in the sky” by fitting Starlink satellites with GPUs, and it is studying how to send container-sized data center modules into space on Starship. At a recent conference, Musk said, “Within five years, space will become the cheapest place to train AI.” It was also reported that OpenAI CEO Sam Altman had approached rocket companies such as Stoke Space about running AI data centers in space.

Challenges remain. The Wall Street Journal noted, “Deploying AI-capable satellites in space poses engineering hurdles and cost problems.” Specific technical problems include managing AI chip temperatures in orbit, shielding chips from radiation, and transmitting data to Earth without delay. Failures cannot be repaired on the spot, and a proliferation of satellites risks adding to space debris. NVIDIA CEO Jensen Huang said, “Space data centers are still a dream,” adding, “The biggest challenge is redesigning chips to withstand radiation.”
